Artificial neural networks (ANNs) are the cornerstone of modern artificial intelligence. Put simply, a neural network is a computational model inspired by the interconnected neurons in the brain. These models are built from layers of “neurons” (nodes) that take input and, through weighted connections and non-linear activation functions, learn complex patterns from the data. For example, IBM describes a neural network as “a machine learning model that stacks simple ‘neurons’ in layers and learns pattern-recognizing weights and biases from data to map inputs to outputs”. This layered processing allows neural networks to excel at tasks like image and speech recognition, language understanding, and decision-making. In this article we break down these ideas step by step, from biological inspiration to real-world use cases, and even address common questions (like “will AI take over?”). Wherever helpful, we link to deeper resources. For instance, see our article on Explainable AI (XAI) for understanding how complex neural models can be interpreted.

- Artificial Intelligence and Neuroscience

- Biological Neural Networks

- Neural Network Architecture

- Artificial Neural Network Architecture

- Artificial Neural Network Diagram

- Perceptron in Neural Networks

- Convolutional Neural Networks

- Neural Artificial Intelligence

- Artificial Neural Network Example

- Artificial Intelligence in Networking

- Will Artificial Intelligence Take Over?

- Applications of Artificial Neural Networks

- FAQs

- 1. What is DL in simple words?

- 2. What are the 4 types of AI?

- 3. Is ChatGPT AI or ML?

- 4. What are the three types of neural networks?

- 5. What are the 4 types of ML?

- 6. What’s the difference between AI and neural networks?

- 7. What is ML vs AI vs DL?

- 8. What are the four elements of deep learning?

- 9. What is a real-time example of deep learning?

- 10. Is every AI a neural network?

- Conclusion

Artificial Intelligence and Neuroscience

Neural networks originate from neuroscience. Early pioneers modeled the brain’s neurons to create computing algorithms. In 1943, Warren McCulloch and Walter Pitts proposed the first mathematical neuron model. Later, in 1958 Frank Rosenblatt introduced the perceptron, one of the very first artificial neural networks for pattern recognition. IBM notes the perceptron is “essentially a linear model with a constrained output” and calls it the “historical ancestor of today’s networks.” These developments show how biological ideas seeded machine learning: our brains can learn by adjusting connections, and ANNs imitate this by adjusting weights.

Biological neural networks (the actual neurons in brains) differ from ANNs. In biology, a neural network is “a population of nerve cells connected by synapses”. A single brain neuron can connect to thousands of others via chemical synapses, sending electrochemical signals. In contrast, artificial networks are much simpler abstractions implemented in software. Still, the comparison highlights why researchers study both AI and neuroscience together: advances in one often inspire the other. For instance, connectionist AI (ANNs) and neuroscience both study how networks of units can learn to process information.

Biological Neural Networks

A biological neural network is literally the network of cells in an animal’s brain or nervous system. Wikipedia defines it as a “population of neurons chemically connected to each other by synapses”. In such networks, each biological neuron sends and receives electrical signals to/from neighbors, amplifying or inhibiting signals via synapses. Many smaller circuits combine into large-scale brain networks. These real neural networks underlie everything we think and do.

Neural networks in AI are only loosely analogous to biological ones. Biological neurons are complex and noisy; artificial neurons are idealized mathematical functions. Yet the analogy helps: AI researchers borrow terms like “neuron,” “synapse,” and “plasticity.” Modern deep learning even takes cues from neuroscience (e.g. hierarchical layers). However, unlike brains, ANNs usually run on conventional computers that keep memory and processing separate (the von Neumann model), and they are trained with explicit algorithms rather than self-organized learning.

Neural Network Architecture

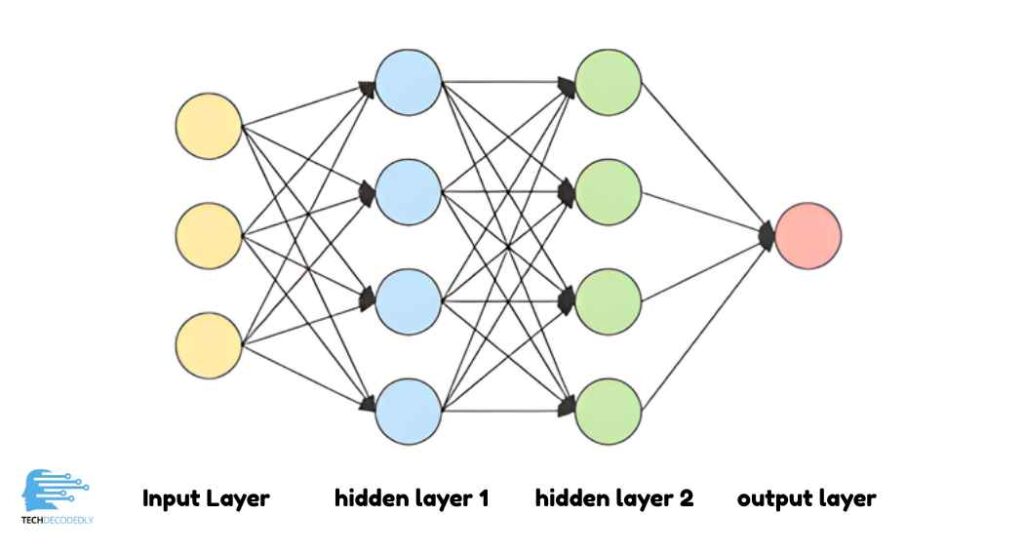

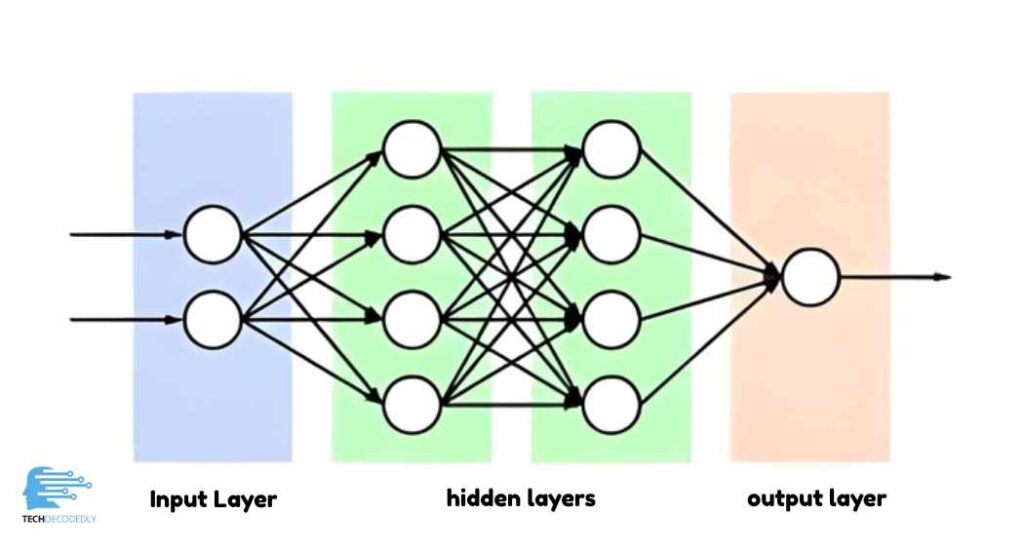

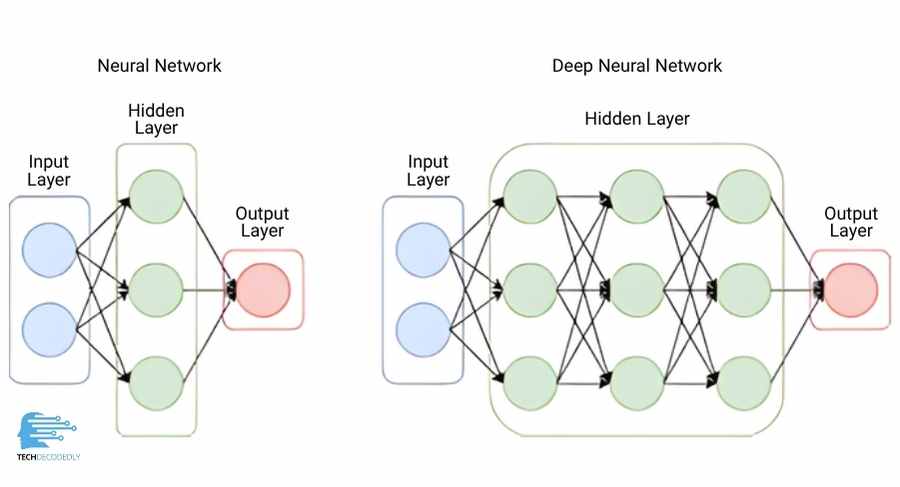

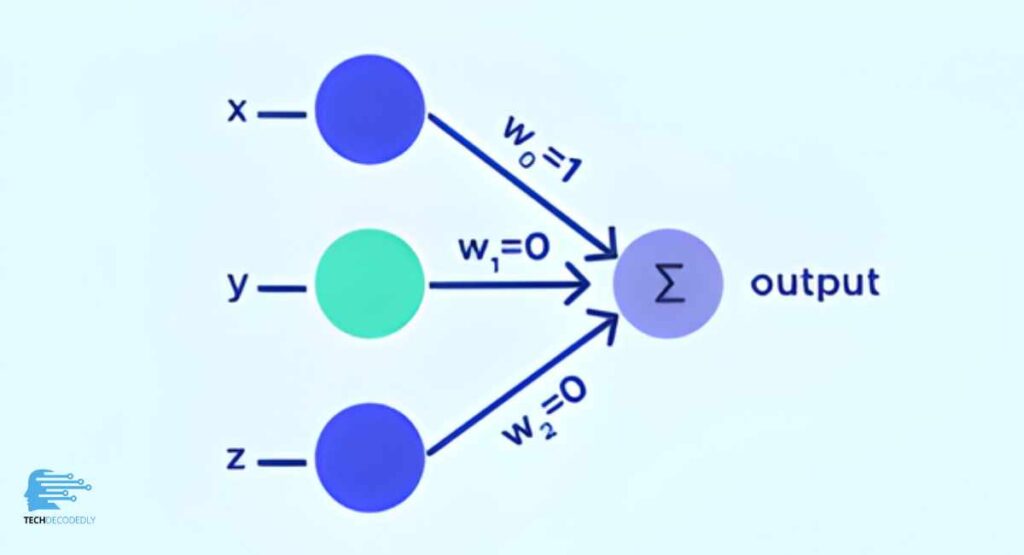

An artificial neural network typically consists of an input layer, one or more hidden layers, and an output layer. Each layer is made up of a number of artificial neurons (or units). Neurons are connected by weighted links, and the weights are updated during training based on the output error. Information is transformed at every layer as it flows from input to output.

- Input layer: Receives the raw data. Examples include the pixel values of an image or the numeric values in a row of a table.

- Hidden layers: One or more intermediate layers of neurons. Each neuron computes the weighted sum of its inputs, shifts it by a bias, and applies a non-linear activation function (such as ReLU, sigmoid or tanh) to produce its output. Through training, hidden-layer neurons learn to extract features from the data, capturing its properties and how they are correlated.

- Output layer: Produces the final result or prediction. After linear combination, a nonlinear activation is applied to give the output (e.g. a probability or class label).

For example, IBM explains that after the hidden layers’ linear combination, “a nonlinear activation function (tanh, sigmoid, ReLU) is added to produce the final prediction”. Thus the network can solve non-linear tasks. Crucially, each connection has a weight (and each neuron a bias) that the network will learn.
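To make the layer description concrete, here is a minimal sketch of a forward pass through one hidden layer and one output neuron. It uses plain Python only; the function name `dense_layer` and all weights, biases, and inputs are hypothetical values picked by hand for illustration, not learned parameters.

```python
import math

def relu(z):
    """ReLU activation: passes positive values, clips negatives to zero."""
    return max(0.0, z)

def sigmoid(z):
    """Sigmoid activation: squashes any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def dense_layer(inputs, weights, biases, activation):
    """Compute one fully connected layer.

    `weights` holds one row of weights per neuron, `biases` one bias per neuron.
    """
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        # Weighted sum of the inputs, shifted by the bias
        z = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(activation(z))
    return outputs

# Hypothetical hand-picked values (illustration only)
inputs = [1.0, 2.0]
hidden_weights = [[0.5, -1.0], [1.0, 1.0]]   # two hidden neurons
hidden_biases = [0.0, -1.0]
output_weights = [[1.0, -1.0]]               # one output neuron
output_biases = [0.5]

hidden = dense_layer(inputs, hidden_weights, hidden_biases, relu)
prediction = dense_layer(hidden, output_weights, output_biases, sigmoid)

print(hidden)       # [0.0, 2.0]
print(prediction)   # a single value between 0 and 1
```

The first hidden neuron is switched off by ReLU because its weighted sum is negative, which is exactly the kind of non-linearity that lets stacked layers represent more than a single linear model could.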

Training an ANN involves adjusting those weights to make the network’s outputs correct. A common method is backpropagation with gradient descent. The basic training loop is:

- Forward pass: Input data flows through the network, computing an output.

- Error calculation: The network’s prediction is compared to the true answer using a loss function.

- Backward pass (backpropagation): The error is propagated back through the network. At each neuron, the algorithm computes how much each weight contributed to the error.

- Weight update: All weights and biases are adjusted slightly (e.g. via gradient descent) to reduce the error on this example.

This process repeats over many examples. Each full pass over the training data (an epoch) tunes the weights further, and training continues until the network’s performance (for example, its accuracy) stops improving. IBM summarizes: “the neural net trains in four steps: forward pass, error calculation, backward pass, and weight update”.
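The four steps can be sketched on the smallest possible case: a single linear neuron with one weight and one bias, trained with per-example gradient descent. This is an illustrative toy, not a full backpropagation implementation; with only one neuron, the backward pass reduces to a single chain-rule step. The function name `train_linear_neuron`, the toy data, and the learning rate are assumptions made for this example.

```python
def train_linear_neuron(data, learning_rate=0.05, epochs=2000):
    """Fit y = w * x + b to (x, y) pairs using per-example gradient descent."""
    w, b = 0.0, 0.0
    for _ in range(epochs):
        for x, y_true in data:
            # 1. Forward pass: compute the prediction
            y_pred = w * x + b
            # 2. Error calculation: squared-error loss is 0.5 * error ** 2
            error = y_pred - y_true
            # 3. Backward pass: gradient of the loss w.r.t. each parameter
            grad_w = error * x
            grad_b = error
            # 4. Weight update: step against the gradient
            w -= learning_rate * grad_w
            b -= learning_rate * grad_b
    return w, b

# Toy data generated from y = 2x + 1 (hypothetical example)
data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]
w, b = train_linear_neuron(data)
print(round(w, 3), round(b, 3))  # close to 2.0 and 1.0
```

In a real multi-layer network the same loop applies, except that step 3 propagates gradients backward through every layer, which deep learning frameworks automate.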

Artificial Neural Network Architecture

The architecture of an ANN refers to how many layers and neurons it has and how they are connected. A simple example is a multilayer perceptron (a feedforward network). More complex architectures include convolutional networks, recurrent networks, transformers, etc. All share the core components above (layers, weights, activations) but differ in connectivity.

For instance, fully connected (dense) layers link every neuron in one layer to every neuron in the next. By contrast, specialized layers like those in convolutional networks connect only local groups of neurons (as we’ll see below). Understanding the architecture is key to knowing what problems a network can solve. Regardless of specifics, any architecture requires training (with backpropagation) and inherits the same strengths and limitations (like being a “black box” without explainability).

Artificial Neural Network Diagram

The diagram above illustrates a simple artificial neural network architecture (source: Wikimedia Commons). It has 8 input nodes (E1–E8), two hidden layers of 12 neurons each, and 3 output nodes. Each arrow represents a weighted connection between neurons. The inputs (left) feed signals through the hidden layers; each neuron multiplies incoming values by its weights and applies an activation function. The output layer (right) then combines these signals into the final result. This layered, directed structure is typical: data flows from the input side, is transformed at each layer, and emerges at the output.

Each node in the diagram works like a simplified neuron. As one walks through the layers, patterns are detected and combined into higher-level features. For example, in image networks the first layer may detect edges, the next corners, and so on. The diagram (which has 8 inputs and 3 outputs) highlights how complex mappings (8→3) are built from many simple units. While this is a toy example, real neural network diagrams can be much larger – some have millions of parameters – but the principle of stacked layers remains.
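As a quick worked example, the number of learnable parameters in the network described above can be counted directly from its layer sizes. The sketch assumes every layer is fully connected and that each non-input neuron has exactly one bias; the helper name `count_parameters` is hypothetical.

```python
def count_parameters(layer_sizes):
    """Count weights and biases in a fully connected feedforward network."""
    # One weight for every (neuron in layer k, neuron in layer k+1) pair
    weights = sum(a * b for a, b in zip(layer_sizes, layer_sizes[1:]))
    # One bias for every neuron outside the input layer
    biases = sum(layer_sizes[1:])
    return weights, biases

# 8 inputs, two hidden layers of 12 neurons, 3 outputs
weights, biases = count_parameters([8, 12, 12, 3])
print(weights, biases, weights + biases)  # 276 27 303
```

Even this toy network has 303 adjustable numbers (8×12 + 12×12 + 12×3 weights plus 27 biases), which gives a sense of how quickly parameter counts grow as layers get wider and deeper.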

Perceptron in Neural Networks

The perceptron is the simplest type of neural network – a single-layer binary classifier. Introduced by Frank Rosenblatt in 1958, it consists of input nodes connected to one output with adjustable weights and a threshold. IBM describes it as a linear model: “the perceptron is the historical ancestor of today’s networks: essentially a linear model with a constrained output”. In practice, a perceptron takes a weighted sum of inputs, adds a bias, and outputs 1 or 0 based on whether this sum exceeds a threshold.

Perceptrons can only solve linearly separable problems (think simple yes/no tasks). Still, they laid the groundwork for deeper networks. A multilayer perceptron (MLP) extends this by adding hidden layers, allowing nonlinear decision boundaries. The classic XOR problem famously showed a single perceptron couldn’t learn certain functions, but a two-layer network could. Thus, perceptrons are mostly of historical and educational interest today, but they introduced the key idea of learning weights from data.
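The sketch below illustrates both points under simple assumptions: a perceptron trained with the classic perceptron learning rule masters the linearly separable AND function, fails to get all four XOR cases right, and a small two-layer network with hand-wired (not learned) weights computes XOR. All function names and weight values here are chosen for illustration.

```python
def step(z):
    """Threshold activation: output 1 if the sum is positive, else 0."""
    return 1 if z > 0 else 0

def predict(w, b, x):
    return step(w[0] * x[0] + w[1] * x[1] + b)

def train_perceptron(samples, epochs=20, lr=1.0):
    """Perceptron learning rule: nudge weights toward misclassified targets."""
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, target in samples:
            delta = target - predict(w, b, x)  # 0 when the prediction is right
            w[0] += lr * delta * x[0]
            w[1] += lr * delta * x[1]
            b += lr * delta
    return w, b

AND_SAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR_SAMPLES = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

and_w, and_b = train_perceptron(AND_SAMPLES)
and_correct = sum(predict(and_w, and_b, x) == t for x, t in AND_SAMPLES)

xor_w, xor_b = train_perceptron(XOR_SAMPLES)
xor_correct = sum(predict(xor_w, xor_b, x) == t for x, t in XOR_SAMPLES)

def xor_two_layer(x1, x2):
    """Hand-wired two-layer network: XOR = OR and not AND."""
    h_or = step(x1 + x2 - 0.5)    # fires if at least one input is 1
    h_and = step(x1 + x2 - 1.5)   # fires only if both inputs are 1
    return step(h_or - h_and - 0.5)

print(and_correct)   # 4: AND is linearly separable
print(xor_correct)   # fewer than 4: no single line separates XOR
print([xor_two_layer(a, b) for (a, b), _ in XOR_SAMPLES])  # [0, 1, 1, 0]
```

The hidden layer is what makes the difference: it re-describes the inputs as "OR" and "AND" features, and in that new space XOR becomes linearly separable.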

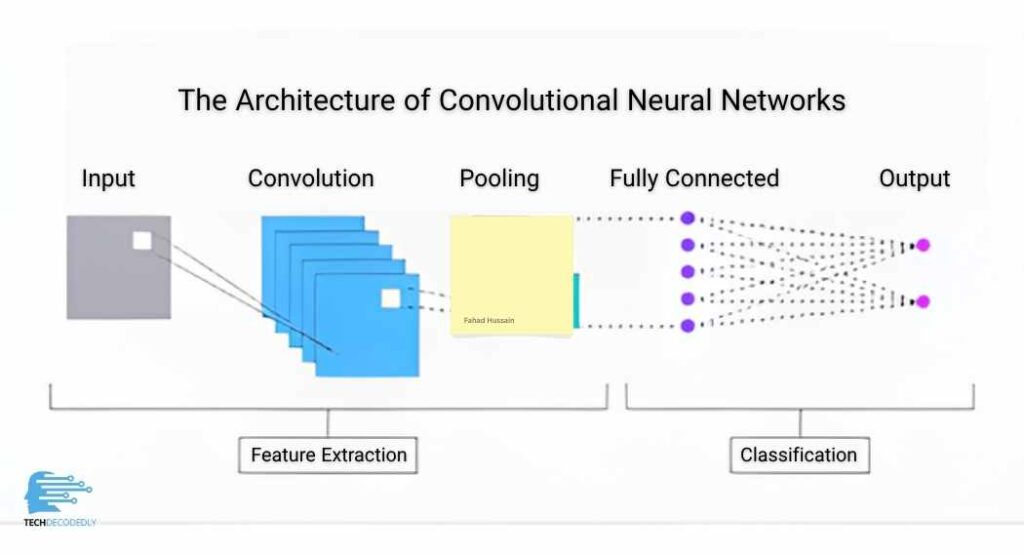

Convolutional Neural Networks

Convolutional Neural Networks (CNNs) are specialized for processing grid-like data, such as images. They include convolutional layers that apply learned filters over the input. Each filter “slides” over the image, detecting local patterns like edges, textures, or shapes. As a result, CNNs automatically learn spatial hierarchies of features. For example, early layers might detect edges, while deeper layers recognize complex structures (eyes, wheels, etc.).
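The sliding-filter idea can be sketched in a few lines of plain Python. This is a simplified illustration with no padding, stride, or channels, and the filter here is fixed by hand rather than learned; the function name `convolve2d` and the tiny image are assumptions for the example. Like most deep learning libraries, it does not flip the kernel, so strictly speaking it computes a cross-correlation.

```python
def convolve2d(image, kernel):
    """Slide `kernel` over `image` and return the resulting feature map."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Multiply the kernel with the image patch under it and sum up
            total = 0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        feature_map.append(row)
    return feature_map

# A 4x4 image: dark on the left (0), bright on the right (1)
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Hand-made filter that responds to a dark-to-bright vertical edge
vertical_edge = [
    [-1, 1],
    [-1, 1],
]

feature_map = convolve2d(image, vertical_edge)
for row in feature_map:
    print(row)  # each row is [0, 2, 0]
```

The filter produces a strong response only in the column where the brightness changes, which is how an early convolutional layer can localize edges. In a trained CNN, the filter values are learned from data instead of being written by hand.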

CNNs are essential for computer vision. As one source notes, “CNNs have become an essential tool for computer vision”. The 1979 Neocognitron (by Kunihiko Fukushima) first introduced the convolution and pooling ideas, and modern CNNs (like AlexNet, ResNet) power image recognition today. AWS explains that CNN hidden layers “perform specific mathematical functions, like summarizing or filtering, called convolutions… each hidden layer extracts and processes different image features”. In short, CNNs are the standard go-to for tasks such as image and video classification, object detection, and any AI task involving spatial data.

Neural Artificial Intelligence

“Neural” artificial intelligence generally refers to AI systems built on neural network models. In practice, it means deep learning. Neural AI systems dominate many modern applications. For instance, IBM observes that neural networks “underpin breakthroughs in computer vision, NLP, speech recognition and countless real-world applications”. In other words, if a product is described as “AI” these days, from voice assistants to self-driving cars, chances are a neural network is involved.

Examples include smartphone virtual assistants (speech-to-text), translation services (language models), recommendation engines (pattern predictors), and more. The power of neural AI comes from learning from data. However, these systems remain mathematical algorithms, not sentient beings. They require data, programming, and human oversight.

Artificial Neural Network Example

Neural networks can solve many practical problems by learning patterns in data. A classic example is spam detection. As IBM illustrates, an email is fed into the network with features like keywords (“prize,” “money,” “win”). Early neurons in the network might each respond to a particular word’s presence. Later layers combine these signals: perhaps detecting that “money” plus “prize” together strongly suggest spam. The final output layer computes a probability of the email being spam, and the network flags it if this probability is high. Through training on many examples, the network “learns how to transform raw features into meaningful patterns” and make accurate predictions.
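A toy version of this spam example might look like the following. It is only a sketch: the keyword list comes from the text above, but the network shape, the weights, and the names `extract_features` and `spam_probability` are hypothetical and set by hand, whereas a real filter would learn its weights from many labeled emails.

```python
import math
import re

KEYWORDS = ["prize", "money", "win"]

def extract_features(email_text):
    """Return 1.0 for each keyword present in the email, else 0.0."""
    words = set(re.findall(r"[a-z]+", email_text.lower()))
    return [1.0 if keyword in words else 0.0 for keyword in KEYWORDS]

def spam_probability(email_text):
    prize, money, win = extract_features(email_text)
    # Hidden neuron (ReLU): fires only when "prize" and "money" co-occur
    prize_and_money = max(0.0, prize + money - 1.0)
    # Output neuron (sigmoid): combines single keywords and the combination.
    # All weights and the bias are hand-picked for illustration.
    z = 0.8 * prize + 0.8 * money + 0.8 * win + 3.0 * prize_and_money - 2.0
    return 1.0 / (1.0 + math.exp(-z))

spam_score = spam_probability("Win a prize and money now!")
ham_score = spam_probability("Lunch meeting at noon tomorrow")

print(spam_score > 0.5)  # True: flagged as spam
print(ham_score > 0.5)   # False: not flagged
```

The negative bias keeps ordinary emails below the 0.5 threshold, while the hidden neuron adds a large boost only when the suspicious combination appears.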

Other examples include:

- Image Recognition: Classifying objects in photos (cats vs dogs, faces in a crowd, handwritten digits). For instance, a CNN can learn to identify a cat by detecting fur textures and whiskers. Neural nets are used in medical imaging (detecting tumors) and autonomous vehicles (identifying pedestrians).

- Speech and Language: Transcribing spoken words or translating between languages. Recurrent or transformer networks can convert speech audio into text, or translate English sentences into Chinese.

- Forecasting and Prediction: Modeling time-series data like stock prices, weather, or sales trends. Neural networks capture complex temporal patterns to predict future values.

- Anomaly Detection: Spotting fraud or defects. For example, a network trained on normal credit card transactions can flag unusual spending patterns (potential fraud). IBM explicitly lists “identifying fraud, detecting anomalies” as a neural net application.

These examples illustrate the versatility of ANNs: each is essentially “a universal function approximator”. See our coverage of AI in Finance: Key Trends and Insights for 2025 for how banks use neural nets, and Data Science in the Defense Industry for military applications of AI.

Artificial Intelligence in Networking

AI is also transforming network engineering and management. In networking, AI is used to automate and optimize systems (sometimes called “AIOps”). Hewlett-Packard explains that “AI in networking refers to… systems that incorporate advanced AIOps… to optimize and automate the performance, security, and management of network infrastructure.” In practice, this means using neural networks and other ML tools to auto-configure routers, balance traffic loads, and predict failures.

For example, AI can analyze usage trends and predict where more capacity will be needed, enabling proactive capacity planning. It can monitor network data in real time, detecting anomalies (like a cyberattack) faster than manual methods. Overall, combining cloud-managed networks with deep learning models creates more efficient and resilient IT infrastructure. In short, AI in networking leverages neural network algorithms to make networks self-driving and self-healing.

Will Artificial Intelligence Take Over?

A common question is whether AI, and by extension neural networks, will “take over.” The short answer: not in any science-fiction sense. Current neural networks are powerful pattern-recognition tools, but they lack general intelligence, intentions, or consciousness. As AI expert Shep Hyken puts it, “No, AI will not take over the world, at least not as it is depicted in the movies”. He notes that AI can solve complex equations and enhance tasks, but it cannot empathize or act on its own motives.

We remain in control of AI’s use. Large tech figures stress that AI is a profound technology (Google’s Sundar Pichai calls it “more profound than fire or electricity”), but they also emphasize responsible development. Good regulations, ethics, and human oversight are key. In practice, neural networks are tools: they do what we train them to do. So while neural AI will continue to grow in capability, experts agree it will remain under human guidance (see also concerns in explainable AI (XAI)). The biggest immediate changes from AI will be automation of tasks and new innovations – not a robot uprising.

Applications of Artificial Neural Networks

Neural networks already power countless real-world applications across industries:

- Computer Vision: Image and video analysis. CNNs enable facial recognition, medical image diagnosis, self-driving car vision, and more.

- Natural Language Processing: Understanding text and speech. Transformer models (like those behind chatbots) translate languages, summarize text, and power virtual assistants.

- Speech Recognition: Converting voice to text (as used in phone assistants and dictation).

- Forecasting & Time Series: Predicting stock prices, weather patterns, or product demand. Recurrent and transformer networks excel at these sequential tasks.

- Anomaly & Pattern Detection: Fraud detection in finance, defect detection in manufacturing, or identifying unusual network traffic. IBM explicitly mentions fraud and anomaly detection as neural net uses.

- Games and Reinforcement Learning: Training agents that learn to play games or optimize strategies. (For example, neural nets are used in DeepMind’s AlphaGo, though reinforcement learning is an AI subfield of its own.)

These applications drive innovation in healthcare, banking, entertainment, defense and more. They illustrate the breadth of ANN use – from fraud prevention in finance to autonomous drones in defense (see our posts on [AI in Finance] and [AI in Defense] for case studies). Even non-technical fields are influenced: for instance, technology trends show up in consumer products (see our Nike Tech Fleece Style Guide 2025 for a fun look at AI-inspired design in apparel!).

In all these cases, the neural network’s architecture is chosen to fit the problem (e.g., CNNs for images, RNNs/transformers for sequences), and it is trained on relevant data. The result is a model that can make predictions or decisions with minimal human intervention. However, as neural models grow more complex, explainability becomes important. Researchers use Explainable AI techniques to interpret network decisions – you can read more about this in our XAI article.

Key Takeaways: Neural networks are a core AI technology inspired by the brain’s structure. They consist of layered neurons (input, hidden, output) that learn via weights, biases, and activations. Training uses forward/backward passes to adjust those weights. With enough data and layers, ANNs can approximate almost any function and power many AI applications. Today, ANNs excel at vision, language, and pattern tasks, but they are still tools under human control (not sentient entities). As AI and computing advance, neural network techniques continue to evolve (e.g. transformers, deeper nets), making “neural AI” an active frontier of research and industry.

FAQs

1. What is DL in simple words?

Deep Learning (DL) is a type of machine learning that uses artificial neural networks to learn patterns from large amounts of data. For example, ChatGPT uses deep learning models trained on huge datasets to understand and generate human-like text.

2. What are the 4 types of AI?

Commonly, AI is categorized into four conceptual types:

- Reactive Machines

- Limited Memory AI

- Theory of Mind AI (conceptual/emerging)

- Self-Aware AI (theoretical)

These describe how advanced the AI’s reasoning capabilities are.

3. Is ChatGPT AI or ML?

ChatGPT is an AI application powered by machine learning, specifically a large language model (LLM). It analyzes text input, understands context, and generates human-like responses.

4. What are the three types of neural networks?

Three foundational types of neural networks are:

- Feedforward Neural Networks (including single-layer and multilayer perceptrons)

- Convolutional Neural Networks (CNNs)

- Recurrent Neural Networks (RNNs)

These form the base for modern deep learning models.

5. What are the 4 types of ML?

Machine learning is commonly divided into four types:

- Supervised Learning

- Unsupervised Learning

- Semi-Supervised Learning

- Reinforcement Learning

6. What’s the difference between AI and neural networks?

Artificial Intelligence is the broad field of making machines mimic human intelligence.

Neural networks are a specific technique within AI that learn from data using interconnected nodes (neurons).

So: All neural networks are AI, but not all AI uses neural networks.

7. What is ML vs AI vs DL?

- AI: The broad science of making machines intelligent.

- ML: A subset of AI using algorithms to learn patterns from data.

- DL: A subset of ML using deep neural networks for complex tasks like vision, language, or speech.

8. What are the four elements of deep learning?

In education contexts, “deep learning” may refer to a learning framework with:

- Learning Partnerships

- Learning Environments

- Leveraging Digital Technologies

- Pedagogical Practices

(Important note: This is not related to AI deep learning — it’s an educational model.)

9. What is a real-time example of deep learning?

Self-driving cars are a classic example. They use deep learning to recognize road signs, pedestrians, traffic lights, and obstacles, enabling real-time decision-making.

10. Is every AI a neural network?

No. AI is a broad field. Neural networks are only one technique. Many AI systems use rules, statistics, search algorithms, or symbolic reasoning without any neural networks.

Conclusion

Understanding artificial neural networks is essential to understanding how modern AI works. From simple perceptrons to advanced deep learning architectures like CNNs, RNNs, and transformers, neural networks now power many of the intelligent systems we use: search engines, self-driving cars, medical imaging, fraud detection, robotics, translation tools, and more. Their strength comes from their ability to learn patterns from massive datasets and to improve as they are trained on more data.

While neural networks are inspired by biological neural systems, they are not digital brains—they are mathematical models built on layers, weights, activations, and training algorithms like backpropagation. Still, neuroscience continues to influence AI research, and every innovation pushes these systems closer to more adaptive, efficient, and explainable intelligence.

As AI advances, so does its impact across industries such as healthcare, finance, security, networking, and automation. The future of AI will rely heavily on more interpretable, energy-efficient, and scalable neural network designs. Whether you’re a beginner or a researcher, understanding these fundamentals gives you the foundation to explore deeper topics like AI ethics, explainable AI, neurosymbolic systems, and next-generation neural architectures.

Neural networks are not just powering today’s AI—they are shaping the future of intelligent technology.

TechDecodedly – AI Content Architect. 4+ years specializing in US tech trends. I translate complex AI into actionable insights for global readers. Exploring tomorrow’s technology today.
