In 2025, AMD (NASDAQ: AMD) has positioned itself as a rising star in the artificial intelligence arena. Once known primarily for CPUs and graphics chips, AMD is now landing marquee AI deals, from a multi-year GPU supply agreement with OpenAI to Oracle’s plan to deploy tens of thousands of AMD GPUs. These partnerships, together with record-breaking supercomputer projects, have helped push AMD’s revenue and stock to new highs. Analysts note that AMD’s total revenue jumped 36% year-over-year in Q3 2025 to a record $9.25 billion, with data center sales reaching $4.3 billion on “strong AI chip demand” and fresh customer wins. Yet despite the buzz, AMD’s stock has seen ups and downs recently, a classic case of profit-taking amid a broad market rally. In this article, we survey the latest AMD AI news: major projects, product launches, financial outlook and market commentary, all backed by credible sources.

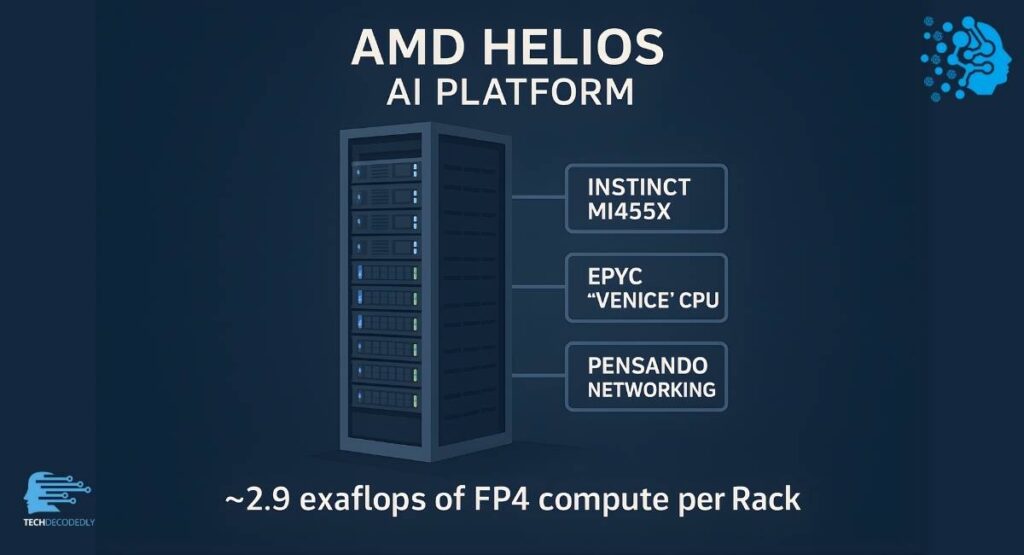

Figure: AMD’s data center innovation. AI racks built on the “Helios” architecture are slated to deliver ~2.9 exaflops of FP4 compute per rack.

AMD’s AI moves are not limited to Wall Street. On the infrastructure front, AMD is collaborating with partners worldwide to build next-generation AI supercomputers. For example, AMD and Hewlett Packard Enterprise (HPE) have unveiled the “Helios” rack-scale AI platform. Helios pairs AMD Instinct MI455X accelerators with next-generation EPYC (“Venice”) CPUs and Pensando networking, delivering roughly 2.9 exaflops of FP4 AI performance per rack. Built on an open Open Compute Project (OCP) rack design, Helios targets cloud providers and data centers, promising faster deployments and scale-out AI capacity.
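As a rough sanity check on the rack-level figure, the sketch below splits the ~2.9 exaflops of FP4 across the GPUs in one rack and counts how many racks a given peak target would need. It assumes 72 accelerators per Helios rack, a figure not stated above. It also treats peak throughput as perfectly additive, which real workloads never achieve. The function names are illustrative only.

```python
import math

RACK_FP4_EXAFLOPS = 2.9  # ~2.9 EF of FP4 per Helios rack, per the article
GPUS_PER_RACK = 72       # assumption: GPU count per rack is not stated in the article


def per_gpu_petaflops(rack_exaflops: float, gpus: int) -> float:
    """Split a rack's peak FP4 throughput evenly across its GPUs (1 EF = 1000 PF)."""
    return rack_exaflops * 1000 / gpus


def racks_needed(target_exaflops: float, rack_exaflops: float) -> int:
    """Smallest whole number of racks whose combined *peak* meets the target."""
    return math.ceil(target_exaflops / rack_exaflops)


if __name__ == "__main__":
    print(f"~{per_gpu_petaflops(RACK_FP4_EXAFLOPS, GPUS_PER_RACK):.1f} PF FP4 per GPU (assumed 72 GPUs)")
    print(racks_needed(10, RACK_FP4_EXAFLOPS), "racks for 10 EF of peak FP4")
```

Sustained performance depends on interconnect, software, and model shape, so these numbers are upper bounds.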

On the global stage, AMD is powering major supercomputers that will serve both science and sovereign AI needs. In the U.S., AMD teamed with the Department of Energy on two systems at Oak Ridge National Laboratory. The upcoming Lux AI cluster, the first U.S. “AI Factory,” will use AMD Instinct MI355X GPUs, EPYC CPUs and Pensando interconnects to train large AI models, with deployment in early 2026. In parallel, the Discovery supercomputer will use next-generation “Venice” EPYCs and MI430X GPUs (a new MI400 series part) to handle HPC and AI workloads securely within the U.S. Together, Lux and Discovery represent a more than $1 billion investment in an open, domestic AI/compute stack.

Across the Atlantic, AMD is likewise influencing Europe’s AI ambitions. Germany’s HLRS center is installing Herder, a new HPE Cray supercomputer powered by AMD Instinct MI430X GPUs and EPYC “Venice” CPUs. Herder (due to debut in 2027) will serve both traditional HPC simulations and growing AI workloads. France is building Alice Recoque, Europe’s next exascale machine, with 94 racks of AMD hardware. Alice Recoque (a 2027 project) uses “Venice” EPYC chips, MI430X GPUs (432 GB of HBM4 each) and advanced cooling. It will deliver over 1 exaflop of performance while using 25% fewer racks than other exascale systems, thanks to 50% better energy efficiency per GPU. These EU projects emphasize compute “sovereignty” and efficiency, combining national goals with AMD’s high-performance silicon.

Meanwhile, AMD has struck deals in the private sector. In Saudi Arabia, AMD joined Cisco and HUMAIN in a joint venture to build up to 1 gigawatt of AI infrastructure by 2030. The JV will start with a 100 MW first phase, combining HUMAIN’s data center capacity with AMD Instinct MI450 GPUs and Cisco’s networking and power solutions. AMD CEO Lisa Su said the partnership will “expand the capacity and global competitiveness” of the Kingdom’s AI ecosystem, and the plans include an “AMD Center of Excellence” in Saudi Arabia. The goal is to give Middle Eastern innovators access to affordable, high-performance AI compute on par with global tech hubs.

On the AI training front, AMD’s hardware is already seeing use. AI startup Zyphra recently trained ZAYA1, a large mixture-of-experts (MoE) foundation model, entirely on AMD Instinct GPUs. Using AMD’s MI300X accelerators (each with a whopping 192 GB of HBM3 memory) and Pensando networking, Zyphra demonstrated that large-scale model training can be efficient on AMD’s platform. Zyphra reports that ZAYA1-base (8.3B total parameters) outperforms models like Meta’s Llama-3 (8B) and rivals Alibaba’s Qwen3 (4B) and Google’s Gemma 3 (12B) on benchmarks. Crucially, the 192 GB of per-GPU memory simplified training by avoiding the need to shard expert layers across GPUs, and optimized I/O delivered 10× faster model saving. “Our results highlight the power of co-designing model architectures with silicon,” said Zyphra’s CEO, underscoring how AMD’s open ROCm ecosystem and memory-rich GPUs facilitated the feat. (See the AMD press release for full details: AMD Powers Frontier AI Training for Zyphra.)
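Why does 192 GB per GPU simplify training? A common rule of thumb for mixed-precision training with the Adam optimizer is about 16 bytes per parameter. That covers bf16 weights and gradients, plus fp32 master weights and two fp32 Adam moments. The sketch below applies that rule to ZAYA1-base’s 8.3B total parameters. The optimizer and precision are assumptions, not details Zyphra has confirmed. Activations, buffers and framework overhead are ignored, so real usage is higher. The sketch also does not imply training on a single GPU. It only shows that a full copy of the training state could plausibly fit per GPU, which is what makes skipping expert sharding feasible.

```python
# Rule-of-thumb bytes per parameter for mixed-precision Adam training (assumption):
# 2 B bf16 weights + 2 B bf16 grads + 4 B fp32 master weights + 8 B fp32 Adam moments
BYTES_PER_PARAM_TRAINING = 2 + 2 + 4 + 8  # = 16 bytes


def training_state_gb(params: float, bytes_per_param: int = BYTES_PER_PARAM_TRAINING) -> float:
    """Weights + gradients + optimizer state in GB (1 GB = 1e9 bytes); excludes activations."""
    return params * bytes_per_param / 1e9


def fits_on_one_gpu(params: float, gpu_mem_gb: float) -> bool:
    """True if the estimated training state alone fits in one GPU's memory."""
    return training_state_gb(params) <= gpu_mem_gb


zaya1_params = 8.3e9  # ZAYA1-base total parameters, per the article
print(f"{training_state_gb(zaya1_params):.1f} GB of training state")
print("fits in 192 GB (MI300X):", fits_on_one_gpu(zaya1_params, 192))
print("fits in 80 GB:", fits_on_one_gpu(zaya1_params, 80))
```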

AMD vs. NVIDIA: AI Chip Comparison

A natural question is how AMD stacks up against market leader NVIDIA. Historically, NVIDIA has led the AI GPU market with its A100 and H100 cards, but AMD’s Instinct chips are closing the gap. For example, the MI300X (the accelerator AMD positioned against the H100) packs 192 GB of HBM3 memory and is backed by an open-source software stack (ROCm). NVIDIA’s H100 (SXM) offers 80 GB of HBM3 per card with the proprietary CUDA ecosystem. AMD claims an 8-GPU “Instinct Platform” (1.5 TB of total memory) can deliver up to 1.6× the LLM inference throughput of a comparable 8-GPU H100 system on large models such as BLOOM. In practical terms, one MI300X can hold the 16-bit weights of a 70-billion-parameter model such as Llama 2 70B (about 140 GB), whereas an H100 would need at least two GPUs. In a sense, AMD’s offering is like a muscle car with extra cargo space: lots of on-board memory and an open, OCP-standard module design that can boost AI throughput for large models. NVIDIA’s H100, by contrast, is like a sleek Tesla: very powerful and efficient, but in a locked-in ecosystem.

| Feature | AMD Instinct MI300X | NVIDIA H100 (SXM) |
|---|---|---|
| Architecture | AMD CDNA 3 (2023) | NVIDIA Hopper (2022) |
| GPU Memory | 192 GB HBM3 (per card) | 80 GB HBM3 (per card) |
| Memory Bandwidth | 5.3 TB/s | ≈3.35 TB/s |
| Lowest AI Precision | FP8 | FP8 |
| LLM Inference (BLOOM, 8-GPU platform, AMD-reported) | Up to 1.6× H100 | Baseline (1×) |
| 70B LLM on One GPU (16-bit weights) | ✔ | ✘ |
| Software Framework | ROCm (open-source) | CUDA (proprietary) |
| Typical Customers | Meta, Microsoft Azure, OpenAI, Oracle | OpenAI, Meta, Google, AWS |
| Use Case | Large-scale training & inference, HPC | Training & inference (data centers) |
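The single-GPU row in the table comes down to simple arithmetic on weight storage. The sketch below assumes 2 bytes per parameter for 16-bit weights and 1 byte for FP8. It counts weights only. The KV cache, activations and runtime overhead need additional memory, so “fits” here is a lower bound, not a deployment guarantee.

```python
# Bytes per parameter for common inference precisions (weights only).
BYTES_PER_PARAM = {"fp16/bf16": 2, "fp8": 1}

# Per-card memory from the comparison table above.
GPU_MEMORY_GB = {"MI300X": 192, "H100 (SXM)": 80}


def weight_gb(params_billion: float, dtype: str) -> float:
    """Memory for model weights alone, in GB (1e9 params x bytes / 1e9 = GB)."""
    return params_billion * BYTES_PER_PARAM[dtype]


for dtype in BYTES_PER_PARAM:
    need = weight_gb(70, dtype)
    for gpu, mem in GPU_MEMORY_GB.items():
        print(f"70B @ {dtype}: {need:.0f} GB of weights -> fits on one {gpu}: {need <= mem}")

# Aggregate memory of the 8-GPU MI300X platform (1536 GB, i.e. ~1.5 TB).
print("8x MI300X platform:", 8 * GPU_MEMORY_GB["MI300X"], "GB")
```

At FP8, a 70B model’s weights (~70 GB) technically fit within an H100’s 80 GB but leave little headroom for the KV cache. That is why the table’s comparison specifies 16-bit weights.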

From a market standpoint, analysts see AMD as the cheaper option on valuation. AMD trades at roughly 11× forward sales vs. about 23× for NVIDIA, reflecting NVIDIA’s premium valuation. If AMD hits its growth targets, it could continue to outperform. Motley Fool analysts note AMD delivered an 82% stock gain in 2025, far outpacing NVIDIA’s 34% for the year. They expect AMD’s revenue to grow ~31–35% in 2026 (to ~$46 billion) and predict AMD’s market cap could climb ~40%. In contrast, NVIDIA’s massive backlog (over $20B) points to a strong 2026, but its growth rate is slowing from last year’s 114% surge. Given these trends, some analysts argue “AMD stock seems primed for more upside in 2026,” although they also warn that a shrinking NVIDIA valuation premium could narrow AMD’s valuation advantage.
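The 2026 forecast implies a 2025 starting point. Dividing the ~$46 billion projection by one plus the growth rate backs out the revenue base the analysts appear to assume. This is only arithmetic on the quoted figures, not an AMD-reported number.

```python
def implied_base(projected: float, growth_rate: float) -> float:
    """Back out the prior-year figure from a projection and its annual growth rate."""
    return projected / (1 + growth_rate)


projected_2026_revenue_b = 46.0  # ~$46B, per the analyst estimate quoted above
for g in (0.31, 0.35):
    base = implied_base(projected_2026_revenue_b, g)
    print(f"{g:.0%} growth -> implied 2025 revenue ~${base:.1f}B")
```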

Financial Outlook and Analyst Day

On November 11, 2025, AMD held its long-anticipated Financial Analyst Day in New York, unveiling ambitious plans. CEO Lisa Su projected that the global data center chip market will reach $1 trillion by 2030, with AI driving much of that growth. AMD aims to capture a growing share: Su said data center chip revenue could hit $100 billion annually in five years. CFO Jean Hu expects AMD’s data center business to grow 60% per year (and overall revenue 35% per year) over the next 3–5 years. The company is also targeting EPS of ~$20 (up from ~$2.68 projected for 2025) in that timeframe.
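To put the Analyst Day targets in perspective, the sketch below compounds the stated growth rates over the three- and five-year ends of the window. It also computes the annual rate the EPS target would require. It assumes growth is constant every year, which real results will not be. The output is illustrative arithmetic, not a forecast.

```python
def compound(start: float, rate: float, years: int) -> float:
    """Value after compounding `rate` annually for `years` years."""
    return start * (1 + rate) ** years


def cagr(start: float, end: float, years: float) -> float:
    """Constant annual growth rate that turns `start` into `end` over `years`."""
    return (end / start) ** (1 / years) - 1


for years in (3, 5):
    print(
        f"{years} yrs: revenue x{compound(1, 0.35, years):.2f}, "
        f"data center x{compound(1, 0.60, years):.2f}, "
        f"EPS $2.68 -> $20 needs {cagr(2.68, 20, years):.0%}/yr"
    )
```

Note that hitting $20 of EPS from ~$2.68 requires compounding faster than the 35% revenue target, so the plan implicitly relies on margin expansion as well as sales growth.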

To meet these goals, AMD is ramping up product development. The company reiterated plans to launch its next-generation MI400 series AI GPUs in 2026 (building on the MI300), along with “rack-scale” AI system offerings similar to NVIDIA’s DGX. AMD is also expanding via acquisitions: for instance, it announced the purchase of MK1 (AI software tools) to bolster its ROCm stack. Su summed up the tone: “It’s an exciting market… data center is the largest growth opportunity out there, and one that AMD is very, very well positioned for.” This bullish guidance helped cement Wall Street’s confidence: AMD’s shares climbed 4% on the analyst-day news.

Stock Market Reaction and Outlook

Despite long-term bullishness, AMD’s stock has been volatile. AMD’s Q3 2025 results were excellent: $9.25 billion in revenue (a record, +36% YoY) and $1.20 EPS, beating forecasts. Data center sales jumped to $4.3 billion on strong AI demand. Yet investors sold off some stock afterward. AMD’s share price fell ~3.7% on November 5, 2025 (to ~$250) despite the beat. Commentators noted that after a 108% year-to-date rally, some profit-taking was natural. At that level, AMD’s market cap was about $406 billion, roughly double its value at the start of the year, with a high P/E of ~151.
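The valuation figures above are linked by two identities. Market cap equals share price times shares outstanding, and P/E equals share price divided by earnings per share. Backing out the implied values is a quick consistency check. The sketch assumes the ~151 P/E is based on trailing twelve-month GAAP earnings, which the article does not state. That would explain why the P/E is far higher than annualizing the quarter’s $1.20 adjusted EPS would suggest.

```python
price = 250.0         # ~$250 share price, per the article
market_cap_b = 406.0  # ~$406B market cap, per the article
pe_ratio = 151.0      # ~151x P/E, per the article (earnings basis assumed to be trailing GAAP)

shares_b = market_cap_b / price  # market cap = price x shares outstanding
trailing_eps = price / pe_ratio  # P/E = price / earnings per share

print(f"implied shares outstanding ~{shares_b:.2f}B")
print(f"implied trailing EPS ~${trailing_eps:.2f}")
```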

Importantly, much of the optimism around AMD rests on its new AI partnerships. Under the OpenAI deal, AMD agreed to supply up to 6 gigawatts of Instinct GPUs to power OpenAI’s data centers. As part of the arrangement, AMD granted OpenAI a warrant for up to 160 million AMD shares (roughly 10% of the company), vesting as deployment and share-price milestones are met. Oracle plans to deploy 50,000 of AMD’s new MI450 GPUs across its cloud. These deals position AMD for tens of billions of dollars in future AI revenue and validate its technology (an “unprecedented vote of confidence” in AMD’s chips). Analysts say the ramp of these rack-scale AI systems, along with the U.S. and EU supercomputer programs, will be AMD’s 2026 growth catalyst.
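The ~10% figure for 160 million shares can be sanity-checked with the share count implied by the market-cap and price figures quoted earlier (about $406 billion at about $250 a share). The sketch assumes that derived share count. It shows the stake both relative to today’s shares and after the new shares are issued, since the two differ.

```python
def stake_fraction(new_shares: float, existing_shares: float) -> float:
    """Ownership fraction after issuing `new_shares` on top of `existing_shares`."""
    return new_shares / (existing_shares + new_shares)


warrant_shares = 160e6           # up to 160 million shares, per the article
existing_shares = 406e9 / 250.0  # ~1.62B, backed out of ~$406B cap at ~$250/share (assumption)

print(f"relative to current share count: {warrant_shares / existing_shares:.1%}")
print(f"stake after issuance:            {stake_fraction(warrant_shares, existing_shares):.1%}")
```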

By contrast, some media discussion of “AMD stock news” has centered on short-term dips or noisy rumors. Headlines like “Why is AMD stock falling today?” have surfaced briefly after swings, but the moves often trace back to profit-taking or broader tech market trends. For instance, after the Q3 beat, AMD shares later dipped again on fears of a semiconductor pullback, only to rebound on fresh guidance. In general, market strategists remain upbeat on AMD long-term, citing the company’s accelerating revenue. One Nasdaq/Motley Fool analysis notes that AMD “was late to the AI scene” but has outperformed Nvidia in 2025, with its stock up 82%. On forums like Reddit, some investors ask “Is AMD a buy right now?”, and the prevailing analyst view is that AMD’s growth story remains intact as long as AI demand stays strong. Still, AMD’s growth (and stock) isn’t guaranteed: as the company’s own filings caution, competitive pressure, supply constraints, or broader macro shocks could alter the outlook.

Overall, AMD today occupies a very different position than it did even a few years ago. It’s chasing a booming AI opportunity, riding major partnerships and government programs, while also competing with giants like NVIDIA. The company is backing its strategy with real products (memory-rich GPUs, open software, and rack-scale AI systems) and with big promises to investors. The result is an engaging story for tech readers and analysts alike: AMD has moved from underdog to front-runner in some AI segments.

Key Takeaways: AMD’s latest AI news (as of late 2025) includes new infrastructure partnerships (e.g. the AMD and HPE “Helios” platform, the Saudi 1 GW build-out, and the U.S. AI Factory), supercomputers (Lux, Discovery, Herder, Alice Recoque), and demonstrated AI training (Zyphra’s ZAYA1 on AMD). Financially, AMD projects ~35%+ annual revenue growth driven by AI and targets EPS of roughly $20 within three to five years. Stock analysts note AMD’s 2025 gains and cheaper valuation vs. NVIDIA, suggesting room for more upside. Competitive comparison (AMD vs. NVIDIA): AMD’s MI300X GPUs offer 192 GB of HBM3 and the open ROCm stack (AMD cites up to ~1.6× LLM inference throughput vs. H100), while NVIDIA’s H100 has 80 GB of HBM3 and the CUDA ecosystem. AMD’s strategy appears promising, but whether “AMD stock is primed for more upside” or fated to plateau remains to be seen. For now, AMD’s AI ambitions are very real, and they’re being tracked closely by investors, technologists, and governments alike.

FAQs

1. What is the latest AMD AI news today?

AMD recently announced new AI partnerships, GPU launches, and data center expansions, including collaborations with OpenAI and the development of its MI400 series for 2026.

2. Is AMD stock a good buy right now?

Many analysts consider AMD stock undervalued compared to competitors like Nvidia, especially with its AI and data center growth. Always evaluate your investment goals before buying.

3. Why is AMD stock falling today?

AMD stock fluctuations are often driven by broader market trends, profit-taking, or semiconductor sector news. Check a live market-data service for real-time updates.

4. What happened at AMD Financial Analyst Day 2025?

At AMD Analyst Day 2025, the company shared a strong AI-focused roadmap, projecting major growth in data center revenue and announcing new GPU and CPU developments.

5. How does AMD compare to Nvidia in AI performance?

AMD’s MI300X GPUs offer more memory per card (192 GB) than Nvidia’s H100, with competitive inference performance and open-source ROCm software support.

6. What is AMD doing in Europe’s AI innovation space?

AMD is powering Europe’s next-generation supercomputers like Alice Recoque and Herder, contributing to high-performance computing (HPC) and AI research across the EU.

7. What is AMD’s Instinct MI300X used for?

The MI300X GPU is designed for large AI model training and inference, often used in data centers and high-performance cloud computing environments.

8. Will AMD benefit from the global AI boom?

Yes, AMD’s growing portfolio of AI hardware, strategic partnerships, and data center solutions positions it as a key player in the next era of AI infrastructure.

9. What are AMD’s key growth drivers in 2026?

AMD’s 2026 growth is expected to be driven by AI accelerator demand, cloud partnerships (e.g., OpenAI, Oracle), and the launch of its MI400 series GPUs.

10. Should I invest in AMD or Nvidia for AI exposure?

Nvidia currently leads in AI chips, but AMD is gaining ground with open-source platforms and cost-effective alternatives. Consider diversification based on your strategy.

Conclusion

The rapid pace of developments highlighted in AMD AI News Today makes one thing clear: AMD is no longer playing catch-up—it’s shaping the future of open, scalable, and efficient AI infrastructure. With its expanding GPU roadmap, deeper cloud partnerships, and growing presence in global supercomputing projects, AMD is positioning itself as a critical force in the next era of AI computing.

For businesses, developers, and investors, this means more choice, more innovation, and more competitive pricing across the AI ecosystem. And for everyday users, AMD’s advancements translate into better performance in everything from laptops to cloud services—all while keeping AI development more open and accessible than ever.

As AI demand accelerates worldwide, AMD’s strategic direction suggests strong potential for continued growth through 2025–2026 and beyond. Whether you’re following the stock, exploring new AI hardware, or tracking the evolution of modern compute platforms, AMD’s momentum is absolutely worth watching.

In short, AMD isn’t just part of the AI race—it’s helping redefine it.

I’m Fahad Hussain, an AI-Powered SEO and Content Writer with 4 years of experience. I help technology and AI websites rank higher, grow traffic, and deliver exceptional content.

My goal is to make complex AI concepts and SEO strategies simple and effective for everyone. Let’s decode the future of technology together!
